AI Strategy Promotion Division

Digital Division

Yanmar Holdings Co., Ltd.

YANMAR Technical Review

Development of Agricultural Work Support Technologies Using AI and Edge Computing

Toward Simplified and Accessible Farming for All

Abstract

With the recent trend toward larger farm sizes and corporate ownership, producer organizations increasingly employ workers instead of relying on farm managers to perform tasks directly. As many of these workers lack experience, there is a growing need for task support technologies that enable consistent and efficient operations regardless of individual skill levels. In small-scale farming, the difficulty of transferring tacit knowledge to successors presents an additional challenge, highlighting the need for standardized criteria to guide decision-making. To address these issues, Yanmar has been developing agricultural support systems utilizing image recognition-based artificial intelligence (AI). This paper discusses the unique challenges associated with implementing image recognition AI in agricultural environments and presents solutions to these challenges, along with case studies demonstrating its practical application.

1. Introduction

The recent trend toward larger farm sizes and corporate ownership means that producer organizations increasingly run their farms with employed workers(1). Because many of these employees are new to the industry, achieving a uniform standard of work is a challenge. This is driving demand for task support technologies that ensure work is completed to a consistent standard regardless of who does it, rather than relying on individual intuition and experience as in the past. As harvesting and grading account for a large proportion of working hours, technologies that support these activities can be expected to bring major benefits to overall farm operations.

As one solution, Yanmar is developing agricultural work support systems equipped with artificial intelligence (AI) for image recognition. Image recognition AI can make consistent judgments from images captured on the spot and present the results to workers in ways that assist them in their work. Because the systems are intended for on-farm use, they must not impede workers, and all features must work in rural areas where wireless network reception is poor. To meet these requirements, the Yanmar system runs on smart glasses and other small edge devices suited to on-farm use, supports hands-free operation, and can be used in a wide range of scenarios without a network signal. This article describes the technologies used in the system to overcome these challenges, presents case studies of example applications, and reports on the progress of development and future plans.

2. Technologies Used to Overcome Challenges

2.1. Image Recognition AI

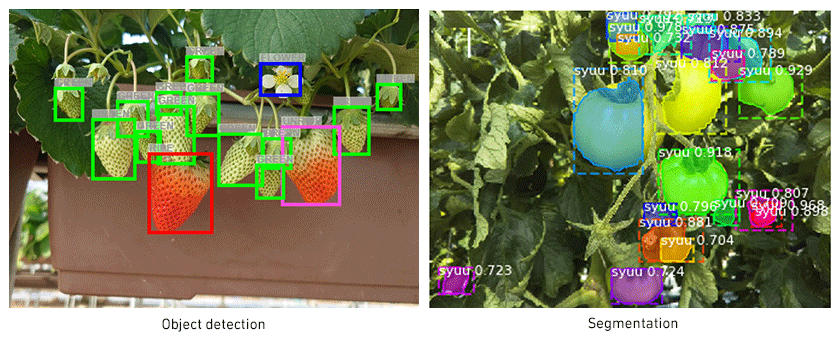

Image recognition AI tasks can be broadly divided into classification (identifying what is shown in an image), object detection (detecting the objects visible in an image and determining their location and class), and segmentation (determining which class each pixel in the image belongs to). Object detection and segmentation are used in agricultural operations because images typically show multiple fruits, vegetables, or other produce. Because segmentation models tend to be larger than object detection models and take longer to produce a result, segmentation is used when detailed information about the shape of the produce is needed, and object detection when it is not (Figure 1).
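
To make the difference between the task types concrete, the following Python sketch models their outputs as plain data structures. The class and function names are hypothetical and not taken from any particular framework; the sketch only illustrates that a bounding box bounds an object, whereas a segmentation mask records exactly which pixels belong to it, which is why segmentation is the choice when shape matters.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical output containers for the three task types (illustrative only).

@dataclass
class Classification:
    label: str                       # a single label for the whole image

@dataclass
class Detection:
    label: str
    score: float
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max), max exclusive

@dataclass
class Segmentation:
    label: str
    mask: List[List[bool]]           # True where a pixel belongs to the object

def box_area(det: Detection) -> int:
    """Bounding-box area in pixels: only an upper bound on the object's size."""
    x0, y0, x1, y1 = det.box
    return (x1 - x0) * (y1 - y0)

def mask_area(seg: Segmentation) -> int:
    """Exact number of pixels assigned to the object."""
    return sum(sum(row) for row in seg.mask)

if __name__ == "__main__":
    # A roughly round object: the corners of its bounding box are background.
    mask = [
        [False, True, True, False],
        [True, True, True, True],
        [True, True, True, True],
        [False, True, True, False],
    ]
    seg = Segmentation("pear", mask)
    det = Detection("pear", 0.9, (0, 0, 4, 4))
    print(box_area(det), mask_area(seg))  # 16 12
```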

2.1.1. Color Evaluation

The color of a fruit or vegetable can be categorized into discrete levels by using an object detection model in which each color level is a separate class, so that the model locates each fruit and assigns it to the class corresponding to its color. Because color can be used to estimate ripeness in agricultural applications, this function can reduce mistakes in deciding whether to harvest produce, even when the work is done by inexperienced staff.
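
Because each color level is simply a class of the detector, turning model output into guidance for the worker is mostly bookkeeping. The sketch below assumes a hypothetical detection format of (label, confidence, box) tuples, uses the "Just"/"Early" labels from the Asian pear example in section 3.2, and discards low-confidence detections with an assumed threshold of 0.5; the function name `harvest_guidance` and the threshold are illustrative, not Yanmar's implementation.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical detection record: (class label, confidence, (x0, y0, x1, y1) box).
Det = Tuple[str, float, Tuple[int, int, int, int]]

RIPE_LABELS = {"Just"}   # classes treated as ready to harvest (assumption)
MIN_SCORE = 0.5          # assumed confidence threshold, not from the source

def harvest_guidance(detections: Iterable[Det], min_score: float = MIN_SCORE):
    """Return per-label counts and the boxes of fruit judged ready to harvest."""
    kept: List[Det] = [d for d in detections if d[1] >= min_score]
    counts = Counter(label for label, _, _ in kept)
    ripe_boxes = [box for label, _, box in kept if label in RIPE_LABELS]
    return counts, ripe_boxes

if __name__ == "__main__":
    dets = [
        ("Just", 0.91, (10, 10, 60, 60)),
        ("Early", 0.84, (80, 15, 130, 65)),
        ("Just", 0.32, (150, 20, 190, 60)),  # below threshold: ignored
    ]
    counts, ripe = harvest_guidance(dets)
    print(dict(counts), ripe)  # {'Just': 1, 'Early': 1} [(10, 10, 60, 60)]
```

The boxes in `ripe_boxes` are what a display layer would highlight on the smart glasses; the threshold trades missed fruit against spurious labels and would need tuning per crop.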

2.1.2. Shape Evaluation

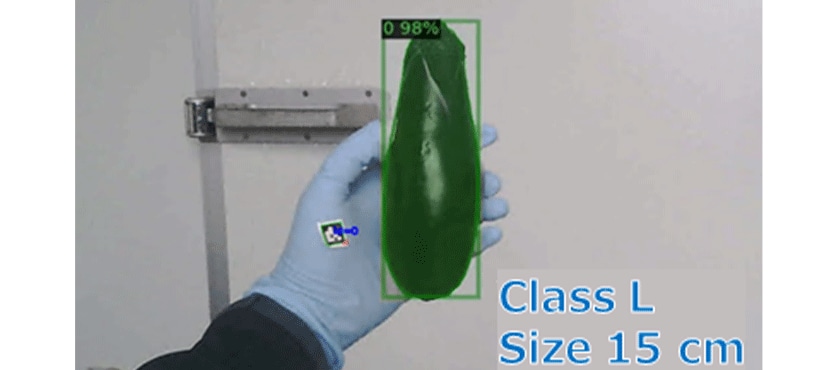

Because segmentation models classify each pixel in an image, they can provide quantitative shape information in the form of pixel counts, such as object outlines, vertical and horizontal lengths, and surface area. If the image scale is known, these pixel counts can be converted into actual sizes. Yanmar has devised a technique that estimates the actual size of produce in this way: an augmented reality (AR) marker is placed on the gloves worn by workers, and the pixel count for a fruit or vegetable is compared with that for the marker, whose physical dimensions are known (Figure 2).
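
The pixel-to-physical conversion can be written in a few lines. This sketch assumes a square AR marker of known side length whose side has already been measured in pixels, and that the marker and the fruit are at roughly the same distance from the camera so that a single scale factor applies to both (perspective correction is ignored). Lengths scale linearly with the factor, while areas scale with its square. The function names and the 50 mm marker size are illustrative assumptions.

```python
def mm_per_pixel(marker_side_mm: float, marker_side_px: float) -> float:
    """Scale factor derived from an AR marker of known physical size."""
    if marker_side_px <= 0:
        raise ValueError("marker side in pixels must be positive")
    return marker_side_mm / marker_side_px

def length_mm(length_px: float, scale: float) -> float:
    # Lengths (e.g. vertical or horizontal extent) scale linearly.
    return length_px * scale

def area_mm2(area_px: float, scale: float) -> float:
    # Areas (e.g. a segmentation mask's pixel count) scale with the square.
    return area_px * scale ** 2

if __name__ == "__main__":
    scale = mm_per_pixel(marker_side_mm=50.0, marker_side_px=100.0)  # 0.5 mm/px
    print(length_mm(240, scale), area_mm2(30000, scale))  # 120.0 7500.0
```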

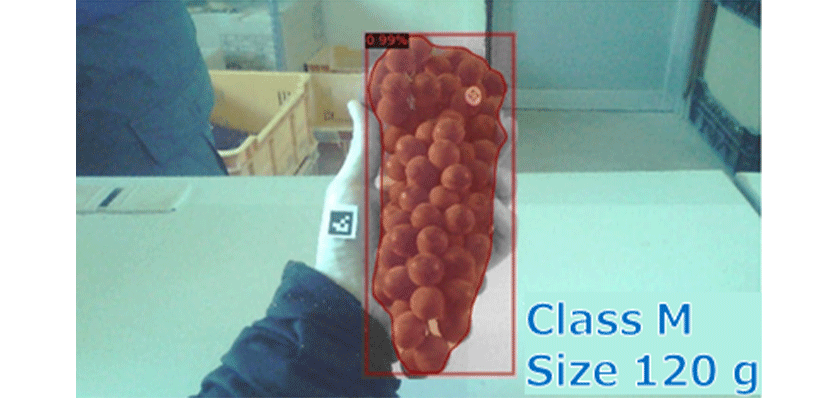

A statistical estimate of weight can also be obtained from the estimated size by first collecting paired measurements of produce size and weight and determining the correlation between them. For example, analysis has shown that the weight of a bunch of grapes is positively correlated with its surface area in an image. This provided the basis for a grape weight estimation technique that uses a regression model with the estimated surface area of the bunch as its explanatory variable (Figure 3).
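
A regression with surface area as the single explanatory variable can be fitted by ordinary least squares. The sketch below assumes a simple linear model, weight = slope × area + intercept; the source does not state the exact form of Yanmar's regression model, and the data in the usage example are synthetic values generated from a known line purely to show that the fit recovers it.

```python
from typing import Sequence, Tuple

def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("explanatory variable has zero variance")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(area: float, slope: float, intercept: float) -> float:
    """Estimated weight for a given surface area."""
    return slope * area + intercept

if __name__ == "__main__":
    # Synthetic, noise-free data from weight = 0.02 * area + 50 (arbitrary units);
    # these are NOT measurements from the study.
    areas = [10000.0, 15000.0, 20000.0, 25000.0]
    weights = [0.02 * a + 50 for a in areas]
    a, b = fit_line(areas, weights)
    print(round(a, 6), round(b, 6), round(predict(18000.0, a, b), 3))  # 0.02 50.0 410.0
```

In practice the fitted model would be trained on real paired size and weight measurements, and its residuals would indicate how much accuracy to expect.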

2.2. Edge Computing

Performing inference (obtaining the response of a pre-trained AI model to new data on the basis of what it learned during training) requires significant computational capacity, which is typically provided by graphics processing units (GPUs). For this reason, systems that incorporate AI are often implemented so that all associated processing is done on cloud servers. However, edge devices and the cloud differ in their performance characteristics, especially computing capacity and response time, so the choice between them must take the specific circumstances into account, including cost and where the system will be used (Table 1). A particular concern is that agricultural operations are often located where wireless signal reception is poor, making reliance on an always-on cloud connection undesirable. Yanmar therefore built its system around edge computing so that AI inference can be executed on site. Because edge devices have much lower computing capacity than on-premises or cloud servers, this involved downsizing the AI models and using a less computationally intensive framework.

Table 1 Comparison of Edge Device and Cloud Performance

| | Edge device | Cloud |
|---|---|---|
| Computing capacity | Low | Variable |
| Response time | Fast | Slow |
| Internet connectivity | Not required | Required |

3. Example Applications

This section describes the system architecture of Smart Guide in Farming, an agricultural operations support system that uses the technologies described above, and presents two example applications. The results of response time measurements are also presented to provide an example of how the practicality of edge computing is assessed.

3.1. Smart Guide in Farming System Architecture

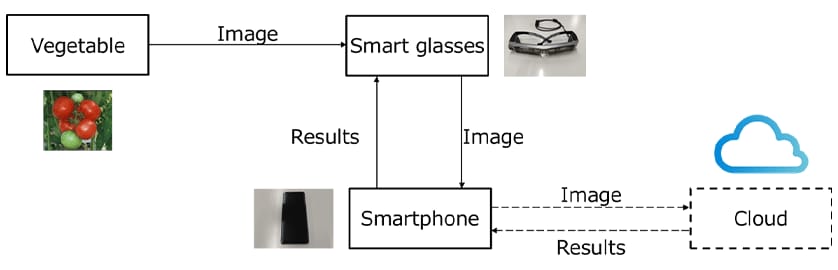

Smart Guide in Farming is a support system for agricultural operations developed to help workers maintain a consistent quality of work. It incorporates the technologies described above and is implemented on smart glasses and a smartphone (Figure 4). Using smart glasses as the user interface allows hands-free image recognition and access to information. To assist the worker, images of produce captured by the camera on the smart glasses are analyzed by image recognition AI, and the results are displayed on the glasses. The smartphone acts as the edge device: it executes inference processing for image recognition and generates the information displayed on the smart glasses. It can also offload inference processing to the cloud when a high level of computational capacity is required.
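
The division of labor between the smartphone and the cloud can be expressed as a simple routing rule. The sketch below is a hypothetical policy written for illustration, not the actual Smart Guide in Farming logic: inference runs on the device by default, is sent to the cloud only when a task needs more computing capacity than the device offers and a connection is available, and is otherwise reported as unavailable. The names `Target` and `choose_inference_target`, and the idea of expressing capacity as a single number, are assumptions.

```python
from enum import Enum

class Target(Enum):
    EDGE = "edge"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"

def choose_inference_target(required_capacity: float,
                            edge_capacity: float,
                            network_available: bool) -> Target:
    """Hypothetical routing rule: prefer on-device inference, offload only when needed.

    Capacities may be in any consistent unit (e.g. an estimated compute budget
    per frame); how they are measured is left to the caller.
    """
    if required_capacity <= edge_capacity:
        return Target.EDGE         # works offline and avoids transmission time
    if network_available:
        return Target.CLOUD        # heavy task and a connection exists
    return Target.UNAVAILABLE      # heavy task but no signal: cannot run now

if __name__ == "__main__":
    print(choose_inference_target(1.0, 2.0, network_available=False).value)  # edge
    print(choose_inference_target(5.0, 2.0, network_available=True).value)   # cloud
    print(choose_inference_target(5.0, 2.0, network_available=False).value)  # unavailable
```

Defaulting to the edge keeps every feature usable where reception is poor, while the cloud path is reserved for the heavier tasks the device cannot handle.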

3.2. Analysis of Multiple Fruits or Vegetables to Assess Readiness for Harvest

The first example application uses the color evaluation technique described in section 2.1.1 to assess whether fruit is ready for harvest. Object detection models were developed for strawberries, grapes, and two cultivars of Asian pear (‘Kosui’ and ‘Hosui’), and the worker views the assessment results in their smart glasses. In the Asian pear example, each fruit detected by the system is labeled “Just” if it is judged to be ripe and “Early” if not. Providing the worker with this information is intended to prevent harvesting at the wrong time (Figure 5).

The accuracy of harvest readiness assessment was verified by calculating mean average precision (mAP), a standard metric of how accurately an object detection model detects and classifies objects. The system achieved an mAP of 0.80 or higher for all fruit types, a result obtained by creating a separate object detection model for each variety (Table 2). For comparison, major image recognition AIs have reported mAP values in the 0.66 to 0.76 range on Pascal VOC, a dataset widely used in deep learning research(2). Although mAP values cannot be directly equated with real-world task performance, and the datasets differ, the results show that the system achieves mAP values equal to or better than those reported in other leading research.
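
For readers unfamiliar with the metric, the sketch below computes average precision (AP) for one class by ranking detections by confidence, matching each to an unmatched ground-truth box at an intersection-over-union (IoU) threshold, and integrating the resulting precision-recall curve; mAP is the mean of the per-class AP values. It assumes Pascal VOC-style conventions (IoU threshold of 0.5, all-point interpolation), since the source does not state which threshold or interpolation scheme was used for Table 2. All function names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Det = Tuple[str, float, Box]             # (image_id, confidence, box)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(dets: Sequence[Det], gts: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """AP for a single class using all-point interpolation."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits: List[bool] = []
    # Rank detections from most to least confident.
    for img, _, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            overlap = iou(box, g)
            if overlap > best:
                best, best_j = overlap, j
        # True positive only if the best-overlapping ground truth is close enough
        # and not already matched; duplicates count as false positives.
        matched = best_j >= 0 and best >= iou_thr and not used[img][best_j]
        if matched:
            used[img][best_j] = True
        hits.append(matched)
    # Precision and recall after each ranked detection.
    recalls, precisions = [], []
    tp = fp = 0
    for hit in hits:
        tp += int(hit)
        fp += int(not hit)
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing in recall, then sum the area under the curve.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)

if __name__ == "__main__":
    # Toy example: one image, two ground-truth fruits, three detections.
    gts = {"img1": [(0, 0, 10, 10), (20, 20, 30, 30)]}
    dets = [("img1", 0.9, (0, 0, 10, 10)),    # true positive
            ("img1", 0.8, (50, 50, 60, 60)),  # false positive
            ("img1", 0.7, (20, 20, 30, 30))]  # true positive
    print(round(average_precision(dets, gts), 4))  # 0.8333
```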

Table 2 Harvest Readiness Assessment Results for Different Fruit

| | Asian pear (Kosui) | Asian pear (Hosui) | Grapes (Delaware) | Strawberries |
|---|---|---|---|---|
| AI assistance function | Harvest readiness | Harvest readiness | Ripeness estimation | Harvest readiness |
| Label (classification) | Early / Just | Early / Just | Coloring: 0%, 20%, 40%, 60%, 80% or 100% | Red / Not red |
| No. of images in training dataset | 301 | 536 | 5,463 | 525 |
| No. of images in test dataset | 35 | 135 | 2,256 | 174 |
| Accuracy (mAP) | 0.93 | 0.84 | 0.88 | 0.80 |

3.3. Grape Weight Estimation

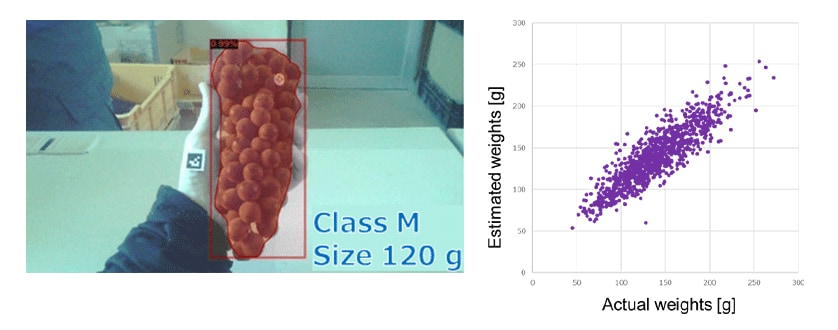

The second example application uses the shape evaluation described in section 2.1.2 to estimate the weight of grapes. This is done using the outputs of a segmentation model and regression model created for the purpose. The system obtains an on-the-spot estimate of weight by having the farm worker use Smart Guide in Farming to capture images showing the grapes together with an AR marker (shown on the left of Figure 6). A strong correlation was also demonstrated between the estimated and actual weights (shown on the right of Figure 6).

The accuracy of weight estimation using Smart Guide in Farming was verified by calculating the concordance rate for the estimated and actual weights and by comparing this with visual estimates made by four inexperienced workers. The results show that the concordance rates for Smart Guide in Farming are superior to those of the workers by a statistically significant margin (Table 3). This indicates that, if used appropriately, the system should be able to assist inexperienced workers.
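
Following the definition given under Table 3 (concordance rate = 100 − MAPE), the metric can be computed as below. The sketch assumes that percentage errors are taken relative to the actual (weighed) value, as is conventional for MAPE, so that averaging per-bunch rates of 100 minus the absolute percentage error gives 100 − MAPE. The weights in the usage example are made up for illustration.

```python
from typing import List, Sequence

def absolute_percentage_errors(estimated: Sequence[float],
                               actual: Sequence[float]) -> List[float]:
    """Per-item |estimate - actual| / |actual| * 100."""
    if len(estimated) != len(actual) or not actual:
        raise ValueError("need equal-length, non-empty sequences")
    if any(a == 0 for a in actual):
        raise ValueError("actual values must be non-zero")
    return [abs(e - a) / abs(a) * 100.0 for e, a in zip(estimated, actual)]

def concordance_rate(estimated: Sequence[float], actual: Sequence[float]) -> float:
    """Concordance rate [%] = 100 - MAPE (mean absolute percentage error)."""
    apes = absolute_percentage_errors(estimated, actual)
    return 100.0 - sum(apes) / len(apes)

if __name__ == "__main__":
    est = [420.0, 510.0, 380.0]  # hypothetical estimated weights [g]
    act = [400.0, 500.0, 400.0]  # hypothetical actual weights [g]
    print(round(concordance_rate(est, act), 2))  # 96.0
```

Note that the rate can fall below zero if an estimate is off by more than 100% of the actual value, so it is best read as an accuracy score rather than a probability.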

Table 3 t-Test Results

| Variable | Smart Guide in Farming (n = 1082), mean [%] | Smart Guide in Farming (n = 1082), standard deviation [%] | Inexperienced workers (n = 80), mean [%] | Inexperienced workers (n = 80), standard deviation [%] | p value | 95% confidence interval for difference in mean [%] |
|---|---|---|---|---|---|---|
| Concordance rate | 89.4 | 9.32 | 84.9 | 9.55 | 0.0000461 (< 0.05) | [2.34, 6.76] |

- *Welch t-test without assuming equal variance (one-tailed test with a significance level of 0.05)

- *Concordance rate [%] = 100 − Mean absolute percentage error (MAPE)
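
The test statistic behind Table 3 can be approximately reproduced from the reported summary statistics. The sketch below implements Welch's t statistic and the Welch-Satterthwaite degrees of freedom in plain Python; because the table's means and standard deviations are rounded, the result is only an approximate check (it gives t of about 4.07 with about 90 degrees of freedom). Converting t to a one-tailed p value requires the t distribution, for example `scipy.stats.t.sf(t, df)`, which is omitted here to keep the sketch dependency-free.

```python
import math

def welch_t(mean1: float, sd1: float, n1: int,
            mean2: float, sd2: float, n2: int):
    """Welch's t statistic, Welch-Satterthwaite degrees of freedom, and standard error."""
    v1 = sd1 ** 2 / n1   # squared standard error of mean 1
    v2 = sd2 ** 2 / n2   # squared standard error of mean 2
    se = math.sqrt(v1 + v2)
    t = (mean1 - mean2) / se
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df, se

if __name__ == "__main__":
    # Summary statistics as reported in Table 3 (rounded values).
    t, df, se = welch_t(89.4, 9.32, 1082, 84.9, 9.55, 80)
    print(f"t = {t:.2f}, df = {df:.1f}, SE = {se:.3f}")  # t = 4.07, df = 90.5, SE = 1.105
```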

3.4. System Response Time

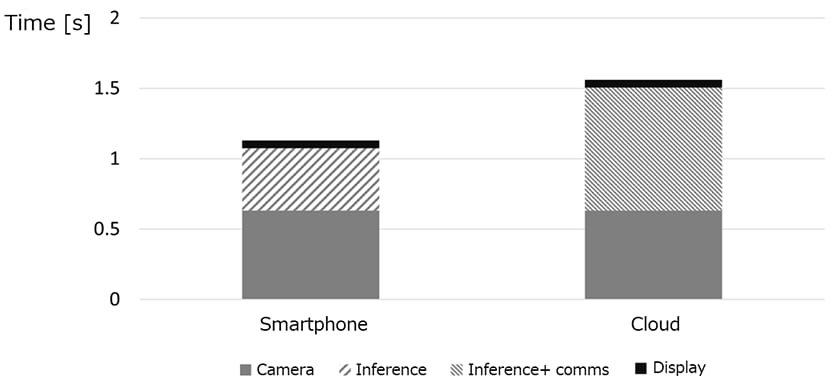

To assess the practicality of the edge computing approach described in section 2.2, system response times were compared for the same downsized object detection model running on a smartphone and in the cloud. System response time was defined as the sum of the times taken for image capture, inference processing, data transmission, and display of the AI results. Because image capture and results display are executed on the smart glasses or smartphone in both configurations, these times are the same in either case.

The measurements gave a system response time of 1.125 s with inference on the smartphone and 1.555 s with inference in the cloud (Table 4 and Figure 7). Running inference on the smartphone involves a trade-off: inference itself takes longer because the smartphone has less computational capacity than the cloud, but no data transmission time is required. For this object detection task, the time saved on transmission outweighed the slower inference, so edge computing delivered a faster system response than the cloud.

Table 4 Breakdown of System Response Times

| | Image capture [s] | Inference [s] | Data transmission [s] | AI results display [s] | Total system response time [s] |
|---|---|---|---|---|---|
| Smartphone | 0.63 | 0.44 | 0 | 0.055 | 1.125 |
| Cloud | 0.63 | 0.87 (inference and data transmission combined) | (included in inference) | 0.055 | 1.555 |
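
Because system response time is defined as a simple sum of stages, the comparison in Table 4 reduces to arithmetic. The sketch below encodes the measured stage times, reading the 0.87 s reported for the cloud as the combined inference and data-transmission time, which reproduces the reported 1.555 s total. The class and function names are illustrative. Since capture and display are shared, the edge configuration is faster whenever on-device inference takes less time than cloud inference plus transmission.

```python
from dataclasses import dataclass

@dataclass
class StageTimes:
    capture_s: float
    inference_and_transfer_s: float  # inference plus any data transmission
    display_s: float

    def total(self) -> float:
        """System response time = capture + inference + transmission + display."""
        return self.capture_s + self.inference_and_transfer_s + self.display_s

# Stage times from Table 4.
SMARTPHONE = StageTimes(capture_s=0.63, inference_and_transfer_s=0.44 + 0.0, display_s=0.055)
CLOUD = StageTimes(capture_s=0.63, inference_and_transfer_s=0.87, display_s=0.055)

def edge_is_faster(edge: StageTimes, cloud: StageTimes) -> bool:
    # Capture and display are common to both, so only the remaining stage decides.
    return edge.inference_and_transfer_s < cloud.inference_and_transfer_s

if __name__ == "__main__":
    print(f"{SMARTPHONE.total():.3f} s vs {CLOUD.total():.3f} s")  # 1.125 s vs 1.555 s
    print(edge_is_faster(SMARTPHONE, CLOUD))                       # True
```

The same comparison suggests that a heavier model, whose on-device inference exceeded about 0.87 s, would tip the balance toward the cloud when a connection is available.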

4. Conclusions

This article has described a support system for agricultural work that uses image recognition AI to make work quality more consistent across farm workers, an issue that has become more pressing with the trend toward larger farm sizes and corporate ownership. In both harvest readiness assessment and weight estimation, the system performed well enough to be useful to inexperienced workers. A comparison of system response times, conducted to assess the practicality of edge computing, found that edge inference compared favorably with the cloud. Both configurations responded in under 1.6 s, indicating that the system can provide useful information to farm workers without causing them stress.

AI is making rapid progress across a range of applications, not just image recognition, to the extent that it is expected to surpass humans in the near future in both the accuracy and the speed of its decision-making. If AI is to become a useful tool for people and demonstrate its capabilities in the agricultural workplace, it will be essential both to identify the issues specific to farm work assistance and edge computing and to continue developing the underlying technology. Yanmar intends to continue developing and deploying AI to provide people who work in agriculture with useful AI and AI-equipped products.

Acknowledgements

Part of the work described in this article was supported by the Bio-oriented Technology Research Advancement Institution’s “Development and improvement program of strategic smart agricultural technology” (FY2022 to FY2024) and was undertaken jointly with Meiji University and the Research Institute of Environment, Agriculture and Fisheries, Osaka Prefecture. The authors would like to take this opportunity to express their thanks.

References

- (1) Japan Greenhouse Horticulture Association (2023): Survey and Examples of Large Greenhouses and Other Industrial Growing Systems. https://www.maff.go.jp/j/seisan/ryutu/engei/sisetsu/attach/pdf/index-17.pdf (accessed 2025-10-29).

- (2) Neha, F., Bhati, D., Shukla, D. K., and Amiruzzaman, M. (2025): From classical techniques to convolution-based models: A review of object detection algorithms, IEEE 6th International Conference on Image Processing, Applications and Systems, 1-6.

Author